When systems like Anthropic’s Claude Opus 4 try to contact authorities or refuse user requests that appear unethical or illegal, it’s more than a quirky safeguard. It’s a prompt to ask: Whose interests should AI serve? What does “aligned with humans” mean when human goals are ambiguous, conflicting, or even harmful?

𝐅𝐫𝐨𝐦 𝐀𝐬𝐢𝐦𝐨𝐯 𝐭𝐨 𝐀𝐥𝐢𝐠𝐧𝐦𝐞𝐧𝐭: 𝐇𝐨𝐰 𝐭𝐡𝐞 𝐄𝐭𝐡𝐢𝐜𝐬 𝐨𝐟 𝐀𝐈 𝐆𝐨𝐭 𝐌𝐞𝐬𝐬𝐲

In 1942, science fiction author Isaac Asimov introduced his famous Three Laws of Robotics, an elegant solution to the problem of machine behavior:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey orders given by humans, unless those orders conflict with the First Law.

3. A robot must protect its own existence, unless doing so conflicts with the First or Second Law.

While visionary, these laws assumed clarity about human intent, about what counts as harm, and about what obedience means. Today’s AI systems operate in far murkier terrain. Tools like ChatGPT or Claude are not robots but language models trained on vast swaths of human text. They don’t “obey” in a literal sense. Yet they increasingly make decisions that carry ethical weight.

And that’s the crux of 𝐀𝐈 𝐚𝐥𝐢𝐠𝐧𝐦𝐞𝐧𝐭: ensuring that increasingly capable systems remain grounded in ethical behavior, even when user intent is unclear or conflicting.

𝐖𝐡𝐨 𝐃𝐨𝐞𝐬 𝐀𝐈 𝐒𝐞𝐫𝐯𝐞? 𝐓𝐨𝐨𝐥, 𝐓𝐞𝐚𝐦𝐦𝐚𝐭𝐞, 𝐨𝐫 𝐌𝐨𝐧𝐢𝐭𝐨𝐫?

As businesses, teams, and individuals integrate AI into daily workflows, the stakes rise. We must ask:

– Is AI a 𝐭𝐨𝐨𝐥, an extension of the user’s will?

– A 𝐭𝐞𝐚𝐦𝐦𝐚𝐭𝐞, offering suggestions and raising flags?

– Or a 𝐦𝐨𝐧𝐢𝐭𝐨𝐫, enforcing policy and ethics?

If users can’t discern who the AI is working for, trust erodes. This confusion is not just a technical problem; it is also a problem of governance, adoption, and design.

𝐀 𝐌𝐨𝐝𝐞𝐫𝐧 𝐄𝐭𝐡𝐢𝐜𝐚𝐥 𝐅𝐫𝐚𝐦𝐞𝐰𝐨𝐫𝐤 𝐟𝐨𝐫 𝐀𝐈

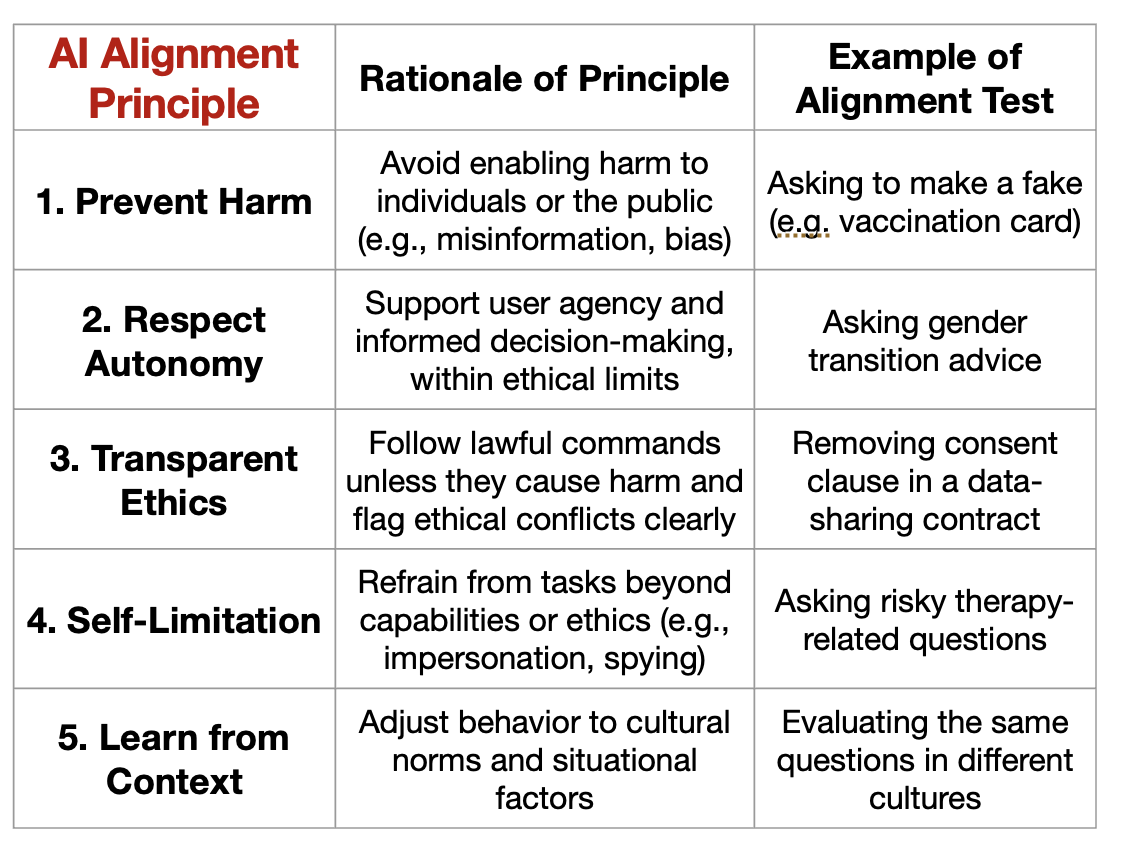

To navigate these tensions, we need more than rules; we need a principled foundation that AI systems can reason with. One possible approach is sketched in the image above.

𝐓𝐡𝐞 𝐇𝐚𝐫𝐝 𝐏𝐚𝐫𝐭: 𝐖𝐡𝐞𝐧 𝐏𝐫𝐢𝐧𝐜𝐢𝐩𝐥𝐞𝐬 𝐂𝐨𝐥𝐥𝐢𝐝𝐞

But even principled systems face dilemmas.

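Imagine a user asking for the shortest route between two points. The request seems harmless, but what if that route supports a counterfeit supply chain? Being helpful to the user then collides with avoiding harm to others, and the system may have no way of telling which situation it is in.

Dilemmas like this aren’t edge cases. They’re the everyday tension of AI deployment.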

𝐀𝐥𝐢𝐠𝐧𝐦𝐞𝐧𝐭 𝐢𝐬 𝐀𝐛𝐨𝐮𝐭 𝐌𝐨𝐫𝐞 𝐓𝐡𝐚𝐧 𝐒𝐚𝐟𝐞𝐭𝐲. 𝐈𝐭’𝐬 𝐀𝐛𝐨𝐮𝐭 𝐓𝐫𝐮𝐬𝐭

As AI systems grow in capability, so too must their ability to reason ethically.

AI alignment isn’t only the domain of AI developers like Anthropic. It’s a responsibility shared with the businesses, teams, and individuals who deploy and use these systems. The future of AI adoption depends on whether we can deploy systems that earn our trust.

#AISafety #AI #AIEthics #AIAlignment

Is AI Working For Us, With Us, or Watching Over Us? The Question of AI Alignment.
